Fonology package

Phonological Analysis in R

The Fonology package (Garcia, 2025) provides functions that are relevant to phonology research and/or teaching. If you have any suggestions or feedback, please visit the GitHub page of the project. To install the package, you will need the function install_github() from the devtools package (see below).

Italian is now part of the package (ipa() function and stopwords_it data). Stopwords have been improved for all languages (they are now based on the stopwords package instead of tm). Please open an issue with your corrections to help improve the package!

How to install

library(devtools)

install_github("guilhermegarcia/fonology")

Main functions and data

- getFeat() and getPhon() to work with distinctive features
- ipa() phonemically transcribes words (real or not) in Portuguese, French, Spanish and Italian
- maxent() builds a MaxEnt Grammar (see also nhg())
- syllable() extracts syllabic constituents
- sonDisp() calculates the sonority dispersion of a given demisyllable or the average dispersion for a set of words (see also meanSonDisp() for the average dispersion of a given word)
- wug_pt() generates hypothetical words in Portuguese
- biGram_pt() calculates bigram probabilities for a given word
- plotVowels() generates vowel trapezoids
- plotSon() plots the sonority profile of a given word
- ipa2tipa() translates IPA sequences into tipa sequences (also see ipa2typst())
- monthsAge() and meanAge() convert and average ages in the yy;mm format
- psl contains the Portuguese Stress Lexicon
- pt_lex contains a simplified version of psl
- stopwords_pt, stopwords_fr, stopwords_it, and stopwords_sp contain stopwords in Portuguese, French, Italian and Spanish

Distinctive features

The function getFeat() requires a set of phonemes ph and a language lg. It outputs the minimal matrix of distinctive features for ph given the phonemic inventory of lg. Five languages are supported: English, French, Italian, Portuguese, and Spanish. You can also use a custom phonemic inventory. See examples below.

The function getPhon() requires a feature matrix ft (a simple vector in R) and a language lg. It outputs the set of phonemes represented by ft given the phonemic inventory of lg. The languages supported are the same as those supported by getFeat(), and you can again provide your own phonemic inventory.
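To make the idea behind getPhon() concrete, here is a minimal base R sketch that filters a tiny feature table by a set of feature values. The table, its feature values, and the name toy_get_phon() are all hypothetical and only serve as an illustration; this is not how Fonology stores its inventories or implements the function.

```r
# Toy feature table: hypothetical values, NOT Fonology's inventory.
features <- data.frame(
  phoneme = c("i", "u", "a", "p", "s"),
  syl     = c("+", "+", "+", "-", "-"),
  hi      = c("+", "+", "-", "-", "-"),
  cont    = c("+", "+", "+", "-", "+"),
  stringsAsFactors = FALSE
)

# Return the phonemes that match every feature in ft (e.g., "+syl").
toy_get_phon <- function(ft, inventory = features) {
  keep <- rep(TRUE, nrow(inventory))
  for (f in ft) {
    value <- substr(f, 1, 1)     # "+" or "-"
    name  <- substring(f, 2)     # feature name, e.g. "syl"
    keep  <- keep & inventory[[name]] == value
  }
  inventory$phoneme[keep]
}

toy_get_phon(c("+syl", "+hi"))    # "i" "u"
toy_get_phon(c("-syl", "+cont"))  # "s"
```

getFeat() goes in the opposite direction: it looks for the smallest set of features that picks out exactly the phonemes supplied, which is why the same pair of phonemes can be a natural class in one inventory and not in another.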

library(Fonology)

getFeat(ph = c("i", "u"), lg = "English")

#> [1] "+hi" "+tense"

getFeat(ph = c("i", "u"), lg = "French")

#> [1] "Not a natural class in this language."

getFeat(ph = c("i", "y", "u"), lg = "French")

#> [1] "+syl" "+hi"

getFeat(ph = c("p", "b"), lg = "Portuguese")

#> [1] "-son" "-cont" "+lab"

getFeat(ph = c("k", "g"), lg = "Italian")

#> [1] "+cons" "+back"

library(Fonology)

getPhon(ft = c("+syl", "+hi"), lg = "French")

#> [1] "u" "i" "y"

getPhon(ft = c("-DR", "-cont", "-son"), lg = "English")

#> [1] "t" "d" "b" "k" "g" "p"

getPhon(ft = c("-son", "+vce"), lg = "Spanish")

#> [1] "z" "d" "b" "ʝ" "g" "v"

library(Fonology)

getFeat(ph = c("p", "f", "w"),

lg = c("a", "i", "u", "y", "p",

"t", "k", "s", "w", "f"))

#> [1] "-syl" "+lab"

getPhon(ft = c("-son", "+cont"),

lg = c("a", "i", "u", "s", "z",

"f", "v", "p", "t", "m"))

#> [1] "s" "z" "f" "v"

IPA transcription

The function ipa() takes a word (or a vector with multiple words, real or not) in Portuguese, French or Spanish in its orthographic form and returns its phonemic (i.e., broad) transcription, including syllabification and stress. The accuracy of grapheme-to-phoneme conversion is at least 80% for all three languages. Narrow transcription is available for Portuguese (based on Brazilian Portuguese), which includes secondary stress—this can be generated by adding narrow = T to the function. Run ipa_pt_test() and ipa_sp_test() for sample words in both languages. By default, ipa() assumes that lg = "Portuguese" (or lg = "pt") and narrow = F.

ipa("atlético")

#> 1

#> "a.ˈtlɛ.ti.ko"

ipa("cantalo", narrow = T)

#> 1

#> "kãn.ˈta.lʊ"

ipa("antidepressivo", narrow = T)

#> 1

#> "ˌãn.t͡ʃi.ˌde.pɾe.ˈsi.vʊ"

ipa("feris")

#> 1

#> "fe.ˈris"

ipa("mejorado", lg = "sp")

#> 1

#> "me.xo.ˈɾa.do"

ipa("nuevos", lg = "sp")

#> 1

#> "nu.ˈe.bos"

ipa("informatique", lg = "fr")

#> [1] "ɛ̃.fɔʁ.ma.tik"

ipa("acheter", lg = "fr")

#> [1] "a.ʃə.te"

A more detailed function, ipa_pt(), is available for Portuguese only. In it, stress is assigned based on two scenarios. First, real words (non-verbs) have their stress assignment derived from the Portuguese Stress Lexicon (Garcia, 2014), if the word is listed there. Second, nonce words follow the general patterns of Portuguese stress as well as probabilistic tendencies shown in my work (Garcia, 2017a, 2017b, 2019). As a result, a nonce word may have antepenultimate stress under the right conditions based on lexical statistics in the language. Likewise, words with other so-called exceptional stress patterns are also generated probabilistically (e.g., LH] words with penultimate stress). Stress and weight are also used to apply both spondaic and dactylic lowering to narrow transcriptions, following work such as Wetzels (2007). Secondary stress is provided when narrow = T. For ipa(), stress is not probabilistic (and therefore not variable): it merely follows the orthography as well as the typical stress rules in Portuguese (and Spanish).

There are several assumptions about surface-forms when narrow = T (i.e., for Portuguese). Most of these assumptions can be adjusted. Diphthongization, for example, is sensitive to phonotactics. A word such as CV.ˈV.CV will be narrowly transcribed as ˈCGV.CV (except when the initial consonant is an affricate (allophonic), which seems to lower the probability of diphthongization based on my judgement). Diphthongization is not applied if the onset is complex. Needless to say, these assumptions are based on a particular dialect of Brazilian Portuguese, and I do not expect all of them to seamlessly apply to other dialects (although some assumptions are more easily generalizable than others).

Narrow transcription also includes (final) vowel reduction, voicing assimilation, l-vocalization, vowel devoicing, palatalization, and epenthesis in sC clusters and other consonant sequences that are expected to be repaired on surface forms (e.g., kt, gn). Examples can be generated with the function ipa_pt_test(). Finally, it’s important to note that the goal of the ipa() function is phonemic transcription, not narrow phonetic transcription. Furthermore, there are certain limitations imposed by ASCII when it comes to specific phonetic diacritics (e.g., super- and subscript symbols, which affect how secondary articulation can be represented).

Use ipa_pt() if you have nonce words as well as real words in Portuguese and you’d like to generate stress probabilistically based on the lexical statistics in the language. Note that ipa_pt() is not vectorized. Use ipa() if you just want to transcribe a large number of words (real or not) in Portuguese or Spanish and you don’t care about probabilistic stress assignment (i.e., you’re fine with categorical stress assignment). 99% of the time, you will use ipa().

Helper functions

If you plan to tokenize texts and create a table with individual columns for stress and syllables, you can use some simple additional helper functions. For example, getWeight() will take a syllabified word and return its weight profile (e.g., getWeight("kon.to") will return HL). The function getStress()¹ will return the stress position of a given word (up to preantepenultimate stress). The word must already be stressed, but the symbol used can be specified in the function (argument stress). The function can instead extract the stressed syllable with the argument syl = TRUE. Finally, countSyl() will return the number of syllables in a given string, and getSyl() will extract a particular syllable from a string. For example, getSyl(word = "kom-pu-ta-doɾ", pos = 3, syl = "-") will take the antepenultimate syllable of the string in question (you can set the direction of the parsing with the argument dir). The default symbol for syllabification is the period.
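As a rough illustration of what these helpers compute, the base R sketch below splits a syllabified string and derives the syllable count, a syllable counted from the right edge, and a weight profile. The toy_ function names are hypothetical, and the weight rule (a syllable is heavy if it ends in anything other than a, e, i, o, u) is a simplifying assumption rather than the package's actual logic.

```r
# Base R sketch; these are NOT the Fonology implementations.

# Number of syllables in a syllabified string.
toy_count_syl <- function(word, syl = ".") {
  length(strsplit(word, syl, fixed = TRUE)[[1]])
}

# Syllable at position pos, counting from the right edge of the word.
toy_get_syl <- function(word, pos = 1, syl = ".") {
  parts <- strsplit(word, syl, fixed = TRUE)[[1]]
  rev(parts)[pos]  # NA if the word has fewer than pos syllables
}

# Weight profile of every syllable.
# Assumption: a syllable is light (L) only if it ends in a plain vowel.
toy_get_weight <- function(word, syl = ".",
                           vowels = c("a", "e", "i", "o", "u")) {
  parts <- strsplit(word, syl, fixed = TRUE)[[1]]
  last  <- substr(parts, nchar(parts), nchar(parts))
  paste(ifelse(last %in% vowels, "L", "H"), collapse = "")
}

toy_count_syl("kom-pu-ta-dor", syl = "-")           # 4
toy_get_syl("kom-pu-ta-doɾ", pos = 3, syl = "-")    # "pu"
toy_get_weight("kon.to")                            # "HL"
```

Unlike this sketch, the package functions also handle stress symbols, parsing direction, and vectors of words.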

Here’s a simple example of how you could tokenize a text and create a table with coded variables using the functions discussed thus far (and without using packages such as tm or tidytext)—note also the function cleanText().

library(tidyverse)

text = "Por exemplo, em quase todas as variedades do português..."

d = tibble(word = text |>

cleanText())

d = d |>

mutate(IPA = ipa(word),

stress = getStress(IPA),

weight = getWeight(IPA),

syl3 = getSyl(IPA, 3),

syl2 = getSyl(IPA, 2),

syl1 = getSyl(IPA, 1),

syl_st = getStress(IPA, syl = TRUE)) |> # get stressed syllable

filter(!word %in% stopwords_pt) # remove stopwords

| word | IPA | stress | weight | syl3 | syl2 | syl1 | syl_st |
|---|---|---|---|---|---|---|---|
| quase | ˈkwa.ze | penult | LL | NA | kwa | ze | kwa |
| variedades | va.ri.e.ˈda.des | penult | LLH | e | da | des | da |
| português | por.tu.ˈges | final | HLH | por | tu | ges | ges |

We often need to extract onsets, nuclei, codas and rhymes from syllables. That’s what syllable() does: given a syllable (phonemically transcribed), the function returns a constituent of interest. Let’s add columns to d where we extract all constituents of the final syllable (syl1 column).

d = d |>

select(-c(syl3, syl2, stress)) |>

mutate(on1 = syllable(syl = syl1, const = "onset"),

nu1 = syllable(syl = syl1, const = "nucleus"),

co1 = syllable(syl = syl1, const = "coda"),

rh1 = syllable(syl = syl1, const = "rhyme"))

| word | IPA | weight | syl1 | syl_st | on1 | nu1 | co1 | rh1 |
|---|---|---|---|---|---|---|---|---|
| quase | ˈkwa.ze | LL | ze | kwa | z | e | NA | e |
| variedades | va.ri.e.ˈda.des | LLH | des | da | d | e | s | es |
| português | por.tu.ˈges | HLH | ges | ges | g | e | s | es |

It’s important to decide whether we want to count glides as part of onsets or codas, or whether we want them to be included in nuclei only. By default, syllable() assumes that all glides are nuclear. You can change that by setting glides_as_onsets = T and glides_as_codas = T (both are set to F by default).
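The decomposition itself can be pictured with a single regular expression. The sketch below is a base R approximation under a strong assumption: only a, e, i, o, u count as nuclei, so a glide such as w ends up in the onset (unlike the default behaviour of syllable(), which treats glides as nuclear). The function name toy_syllable() is hypothetical.

```r
# Base R sketch of onset / nucleus / coda / rhyme extraction.
# Assumption: the nucleus is any run of the plain vowels in `vowels`.
toy_syllable <- function(syl, vowels = "aeiou") {
  pattern <- paste0("^([^", vowels, "]*)([", vowels, "]+)([^", vowels, "]*)$")
  m <- regmatches(syl, regexec(pattern, syl))[[1]]
  if (length(m) == 0) return(NULL)        # no vowel found in the syllable
  parts <- c(onset = m[2], nucleus = m[3], coda = m[4])
  parts[parts == ""] <- NA                # empty constituents become NA
  parts["rhyme"] <- paste0(m[3], m[4])    # rhyme = nucleus + coda
  parts
}

toy_syllable("des")  # onset "d", nucleus "e", coda "s", rhyme "es"
toy_syllable("ze")   # onset "z", nucleus "e", coda NA,  rhyme "e"
```

The results for des and ze match the rows in the table above; syllables with glides or nasal vowels would need a richer vowel set than this sketch assumes.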

If you have a considerably large number of words to analyze with functions such as ipa() or syllable(), it’s much faster to first run the functions on types and then extend the variables created to all tokens (say, by using right_join() from dplyr).
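The types-then-tokens strategy can be sketched as follows. To keep the example self-contained, slow_transcribe() is a hypothetical stand-in for an expensive call such as ipa(), and base R match() is used in place of the dplyr join mentioned above; the logic is the same.

```r
# Tokens: every occurrence of every word in the corpus.
tokens <- c("casa", "mar", "casa", "praia", "mar", "casa")

# Hypothetical stand-in for an expensive function such as ipa().
slow_transcribe <- function(x) toupper(x)

# 1. Run the expensive function once per type (unique word).
types      <- unique(tokens)
type_table <- data.frame(word = types,
                         ipa  = slow_transcribe(types),
                         stringsAsFactors = FALSE)

# 2. Extend the result to all tokens with a lookup.
token_table     <- data.frame(word = tokens, stringsAsFactors = FALSE)
token_table$ipa <- type_table$ipa[match(token_table$word, type_table$word)]

nrow(type_table)   # 3 calls' worth of work instead of 6
token_table
```

With a large corpus, the number of types is usually far smaller than the number of tokens, so the savings grow with corpus size.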

IPA transcription of lemmas

You can easily combine Fonology with other packages that have tagging capabilities. In the example below, we import a short excerpt of Os Lusíadas, tag it using udpipe (Wijffels, 2023), and transcribe only the nouns in the data.

library(udpipe)

# Download model for Portuguese:

pt = udpipe_download_model(language = "portuguese-gsd")

udmodel_pt = udpipe_load_model(file = pt$file_model) # path returned by udpipe_download_model()

txt_pt = read_lines("data_files/lus.txt") |>

str_to_lower()

set.seed(1)

annotation_pt = udpipe_annotate(udmodel_pt, txt_pt) |>

as_tibble() |>

select(sentence, token, lemma, upos)

lusiadas = annotation_pt |>

select(lemma, upos) |>

filter(upos == "NOUN",

!is.na(lemma)) |>

mutate(ipa = ipa(lemma),

stress = getStress(ipa)) |>

select(-upos) |>

ungroup()

| lemma | ipa | stress |
|---|---|---|
| arma | ˈar.ma | penult |
| barão | ba.ˈrãw̃ | final |
| praia | ˈpra.ja | penult |
| mar | ˈmar | final |
| taprobana | ta.pro.ˈba.na | penult |

Probabilistic grammars

The function maxent() estimates weights in a Maximum Entropy Grammar (Goldwater & Johnson, 2003; Hayes & Wilson, 2008; Wilson, 2006) given a tableau object containing inputs, outputs, constraints, violations and observations (see documentation). The function returns a list with different objects, including learned weights, BIC value and predicted probabilities for each output. If the reader wishes to pursue a comprehensive MaxEnt analysis, I strongly recommend the maxent.ot package, which is dedicated exclusively to MaxEnt grammars (Mayer et al., 2024). Here’s an example of maxent() in action.

maxent_data <- tibble::tibble(

input = rep(c("pad", "tab", "bid", "dog", "pok"), each = 2),

output = c("pad", "pat", "tab", "tap", "bid", "bit", "dog", "dok", "pog", "pok"),

ident_vce = c(0, 1, 0, 1, 0, 1, 0, 1, 1, 0),

no_vce_final = c(1, 0, 1, 0, 1, 0, 1, 0, 1, 0),

obs = c(5, 15, 10, 20, 12, 18, 12, 17, 4, 8)

)

maxent(tableau = maxent_data)

#> $predictions

#> # A tibble: 10 × 12

#> input output ident_vce no_vce_final obs harmony max_h exp_h Z obs_prob

#> <chr> <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

#> 1 pad pad 0 1 5 0.639 0.639 1 2.79 0.25

#> 2 pad pat 1 0 15 0.0541 0.639 1.79 2.79 0.75

#> 3 tab tab 0 1 10 0.639 0.639 1 2.79 0.333

#> 4 tab tap 1 0 20 0.0541 0.639 1.79 2.79 0.667

#> 5 bid bid 0 1 12 0.639 0.639 1 2.79 0.4

#> 6 bid bit 1 0 18 0.0541 0.639 1.79 2.79 0.6

#> 7 dog dog 0 1 12 0.639 0.639 1 2.79 0.414

#> 8 dog dok 1 0 17 0.0541 0.639 1.79 2.79 0.586

#> 9 pok pog 1 1 4 0.693 0.693 1 3.00 0.333

#> 10 pok pok 0 0 8 0 0.693 2.00 3.00 0.667

#> # ℹ 2 more variables: pred_prob <dbl>, error <dbl>

#>

#> $weights

#> ident_vce no_vce_final

#> 0.05410679 0.63904039

#>

#> $log_likelihood

#> [1] -78.72152

#>

#> $log_likelihood_norm

#> [1] -7.872152

#>

#> $bic

#> [1] -152.8379

Finally, a couple of functions are dedicated to Noisy Harmonic Grammars. These are pedagogical tools that can be used to demonstrate how probabilities are generated given constraint weights and violation profiles for different candidates. The function nhg() takes a tableau object and returns predicted probabilities given n simulations. The user can also set the standard deviation for the noise used. The function plotNhg() can be helpful to visualize how different standard deviations affect probabilities over candidates after 100 simulations.
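To show how the two models turn weights and violations into probabilities, here is a small base R sketch for the input pad, using the (rounded) weights learned above. The MaxEnt part is the standard formula, where each candidate's probability is proportional to exp(-harmony). The Noisy HG part, toy_nhg(), is a hypothetical illustration that assumes Gaussian noise is added to the constraint weights on every evaluation; nhg() may differ in its details.

```r
set.seed(1)  # make the simulation reproducible

# Violation profiles for the two candidates of input "pad".
violations <- rbind(
  pad = c(ident_vce = 0, no_vce_final = 1),
  pat = c(ident_vce = 1, no_vce_final = 0)
)

# Weights taken (rounded) from the maxent() output above.
weights <- c(ident_vce = 0.0541, no_vce_final = 0.6390)

# MaxEnt: harmony is the weighted sum of violations;
# probability is exp(-harmony), normalized over the candidates.
harmony     <- drop(violations %*% weights)
maxent_prob <- exp(-harmony) / sum(exp(-harmony))
maxent_prob  # pat is about 0.64

# Noisy HG (assumption: noise is added to the weights).
# On each run the candidate with the lowest noisy harmony wins.
toy_nhg <- function(violations, weights, n = 1000, noise_sd = 1) {
  wins <- setNames(numeric(nrow(violations)), rownames(violations))
  for (i in seq_len(n)) {
    noisy_w <- weights + rnorm(length(weights), mean = 0, sd = noise_sd)
    h       <- drop(violations %*% noisy_w)
    winner  <- names(which.min(h))
    wins[winner] <- wins[winner] + 1
  }
  wins / n  # proportion of wins approximates the probability
}

nhg_prob <- toy_nhg(violations, weights)
nhg_prob
```

Changing noise_sd shows the effect described above: with very small noise the lower-harmony candidate wins almost every run, while larger noise pushes the probabilities toward chance.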

Sonority

There are three functions in the package to analyze sonority. First, demi(word = ..., d = ...) extracts either the first (d = 1, the default) or second (d = 2) demisyllable of a given (syllabified) word (or vector of words). Second, sonDisp(demi = ...) calculates the sonority dispersion score of a given demisyllable, based on Clements (1990) (see also Parker (2011)). Note that this metric does not differentiate sequences that respect the sonority sequencing principle (SSP) from those that don’t, i.e., pla and lpa will have the same score. For that reason, a third function exists, ssp(demi = ..., d = ...), which evaluates whether a given demisyllable respects (1) or doesn’t respect (0) the SSP. In the example below, the dispersion score of the first demisyllable in the penult syllable is calculated. Here, ssp() isn’t relevant, since all words in Portuguese respect the SSP.

example = tibble(word = c("partolo", "metrilpo", "vanplidos"))

example = example |>

rowwise() |>

mutate(ipa = ipa(word),

syl2 = getSyl(word = ipa, pos = 2),

demi1 = demi(word = syl2, d = 1),

disp = sonDisp(demi = demi1),

SSP = ssp(demi = demi1, d = 1))

| word | ipa | syl2 | demi1 | disp | SSP |
|---|---|---|---|---|---|
| partolo | par.ˈto.lo | to | to | 0.06 | 1 |
| metrilpo | me.ˈtril.po | tril | tri | 0.56 | 1 |
| vanplidos | vam.ˈpli.dos | pli | pli | 0.56 | 1 |
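The scores in the table can be reproduced by hand with Clements' dispersion formula, which sums 1/d² over every pair of segments in the demisyllable, where d is the distance between the two segments on the sonority scale. The base R sketch below assumes a five-step scale (obstruent < nasal < liquid < glide < vowel) and a small, hypothetical segment-to-rank mapping; the toy_ functions are illustrations, not the package's code.

```r
# Assumed sonority ranks: obstruent 1 < nasal 2 < liquid 3 < glide 4 < vowel 5.
# The mapping covers only a few segments, for illustration.
sonority <- c(p = 1, t = 1, k = 1, b = 1, d = 1, g = 1, f = 1, s = 1,
              m = 2, n = 2, l = 3, r = 3, j = 4, w = 4,
              a = 5, e = 5, i = 5, o = 5, u = 5)

# Dispersion: sum of 1 / d^2 over all pairs of segments.
toy_son_disp <- function(demi) {
  ranks <- sonority[strsplit(demi, "")[[1]]]
  if (length(ranks) < 2) return(NA_real_)  # needs at least one pair
  pairs <- combn(ranks, 2)                 # one column per pair
  sum(1 / (pairs[1, ] - pairs[2, ])^2)
}

# SSP for an initial demisyllable: sonority must rise toward the nucleus.
toy_ssp <- function(demi) {
  ranks <- sonority[strsplit(demi, "")[[1]]]
  as.integer(all(diff(ranks) > 0))
}

round(toy_son_disp("to"), 2)   # 0.06
round(toy_son_disp("tri"), 2)  # 0.56
toy_son_disp("pla") == toy_son_disp("lpa")  # TRUE: same dispersion
c(toy_ssp("pla"), toy_ssp("lpa"))           # 1 0: only pla respects the SSP
```

Under this scale, lower scores correspond to larger sonority distances between segments, which is why to scores lower than tri or pli. The pla/lpa comparison illustrates why a separate SSP check is needed.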

You may also want to calculate the average sonority dispersion for whole words with the function meanSonDisp(). If your words of interest are possible or real Portuguese words, they can be entered in their orthographic form. Otherwise, they need to be phonemically transcribed and syllabified. In this scenario, use phonemic = T.

meanSonDisp(word = c("partolo", "metrilpo", "vanplidos"))

#> [1] 1.53

Plotting sonority

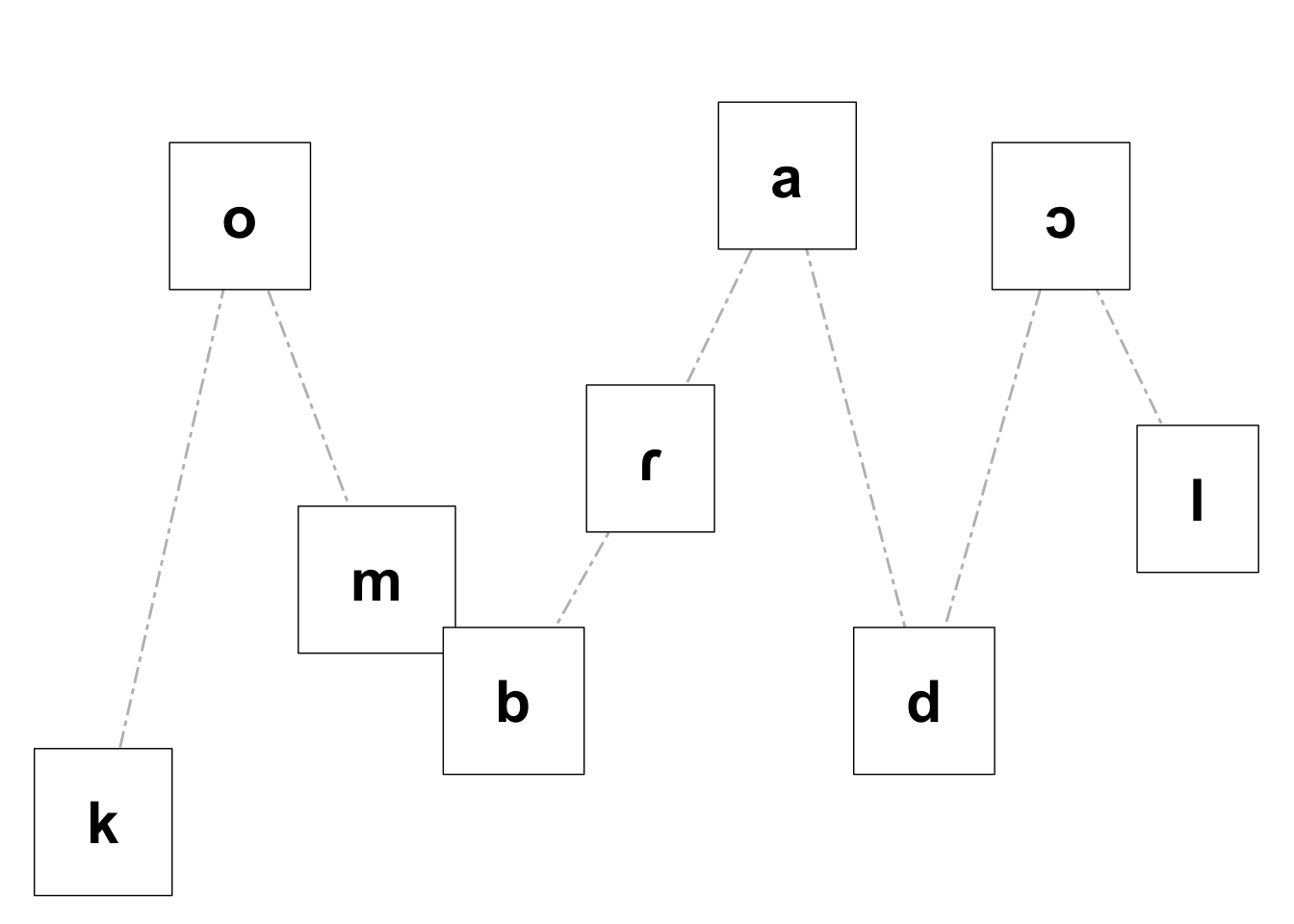

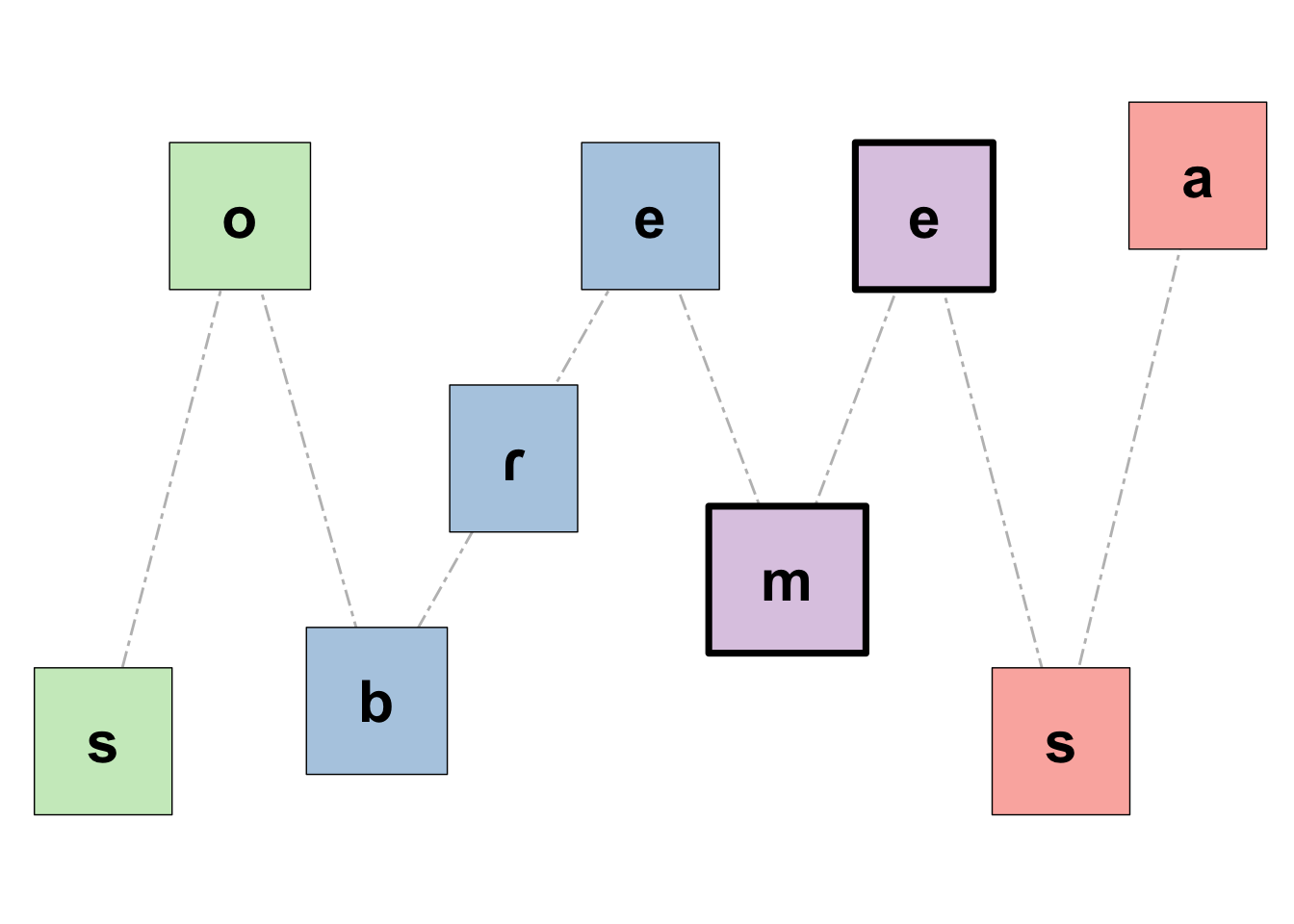

The function plotSon() creates a plot using ggplot2 to visualize the sonority profile of a given word, which must be phonemically transcribed. This is adapted from the Shiny App you can find here. If you want the figure to differentiate the syllables in the word of interest (syl = T), your input must also be syllabified (in that case, the stressed syllable will be highlighted with thicker borders). Finally, if you want to save your figure, simply add save_plot = T to the function. The function has a relatively flexible phonemic inventory. If a phoneme isn’t supported, the function will print it (and the figure won’t be generated). The sonority scale used here can be found in Parker (2011).

"combradol" |>

ipa() |>

plotSon(syl = F)

"sobremesa" |>

ipa(lg = "sp") |>

plotSon(syl = T)

Bigram probabilities

The function biGram_pt() returns the log bigram probability for a possible word in Portuguese. The string must use broad phonemic transcription, but syllabification and stress are not required. The reference used to calculate probabilities is the Portuguese Stress Lexicon.

biGram_pt("paklode")

#> [1] -43.11171

Two additional functions can be used to explore bigrams: nGramTbl() generates a tibble with phonotactic bigrams from a given text, and plotnGrams() creates a plot for inputs generated with nGramTbl(). Check ?plotnGrams() for more information.
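To make the notion of a log bigram probability concrete, the base R sketch below estimates conditional bigram probabilities from a three-word toy lexicon and sums their logs for a new word. Everything here is an assumption made for illustration: the toy lexicon, the use of # as a word-boundary symbol, and the absence of smoothing (an unseen bigram yields -Inf). biGram_pt() relies on the Portuguese Stress Lexicon and may differ on all of these points.

```r
# Hypothetical toy lexicon (phonemic strings, one character per segment).
lexicon <- c("pa", "pa", "ta")

# Bigrams of a word, with "#" marking word boundaries.
bigrams <- function(w) {
  ch <- c("#", strsplit(w, "")[[1]], "#")
  paste0(head(ch, -1), tail(ch, -1))
}

# Count bigrams and their first symbols across the lexicon.
all_bg       <- unlist(lapply(lexicon, bigrams))
bg_tab       <- table(all_bg)
first_tab    <- table(substr(all_bg, 1, 1))
bg_counts    <- setNames(as.vector(bg_tab), names(bg_tab))
first_counts <- setNames(as.vector(first_tab), names(first_tab))

# Log probability of a word: sum of log P(second symbol | first symbol).
toy_log_bigram <- function(w) {
  bg  <- bigrams(w)
  num <- bg_counts[bg]
  num[is.na(num)] <- 0                    # unseen bigram
  den <- first_counts[substr(bg, 1, 1)]
  p   <- ifelse(is.na(den), 0, num / den) # unseen first symbol
  sum(log(p))
}

toy_log_bigram("pa")  # log(2/3): "#p" occurs in 2 of the 3 words
toy_log_bigram("ta")  # log(1/3)
toy_log_bigram("ka")  # -Inf: "#k" never occurs in the toy lexicon
```

Because log probabilities are summed over bigrams, longer words tend to receive lower scores, which is worth keeping in mind when comparing words of different lengths.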

Word generator for Portuguese

The function wug_pt() generates a hypothetical word in Portuguese. Note that this function is meant to be used to get you started with nonce words. You will most likely want to make adjustments based on phonotactic preferences. The function already takes care of some OCP effects and it also prohibits more than one onset cluster per word, since that’s relatively rare in Portuguese. Still, there will certainly be other sequences that sound less natural. The function is not too strict because you may have a wide range of variables in mind as you create novel words. Finally, if you wish to include palatalization, set palatalization = T—if you do that, biGram_pt() will de-palatalize words for its calculation, as it’s based on phonemic transcription.

set.seed(1)

wug_pt(profile = "LHL")

#> [1] "dra.ˈbur.me"

# Let's create a table with 5 nonce words

# and their bigram probabilities

set.seed(1)

tibble(word = character(5)) |>

mutate(word = wug_pt("LHL", n = 5),

bigram = word |>

biGram_pt())

| word | bigram |
|---|---|
| dra.ˈbur.me | -118.61127 |
| ze.ˈfran.ka | -85.59775 |
| be.ˈʒan.tre | -84.75405 |
| ʒa.ˈgran.fe | -87.60279 |
| me.ˈxes.vro | -100.89858 |

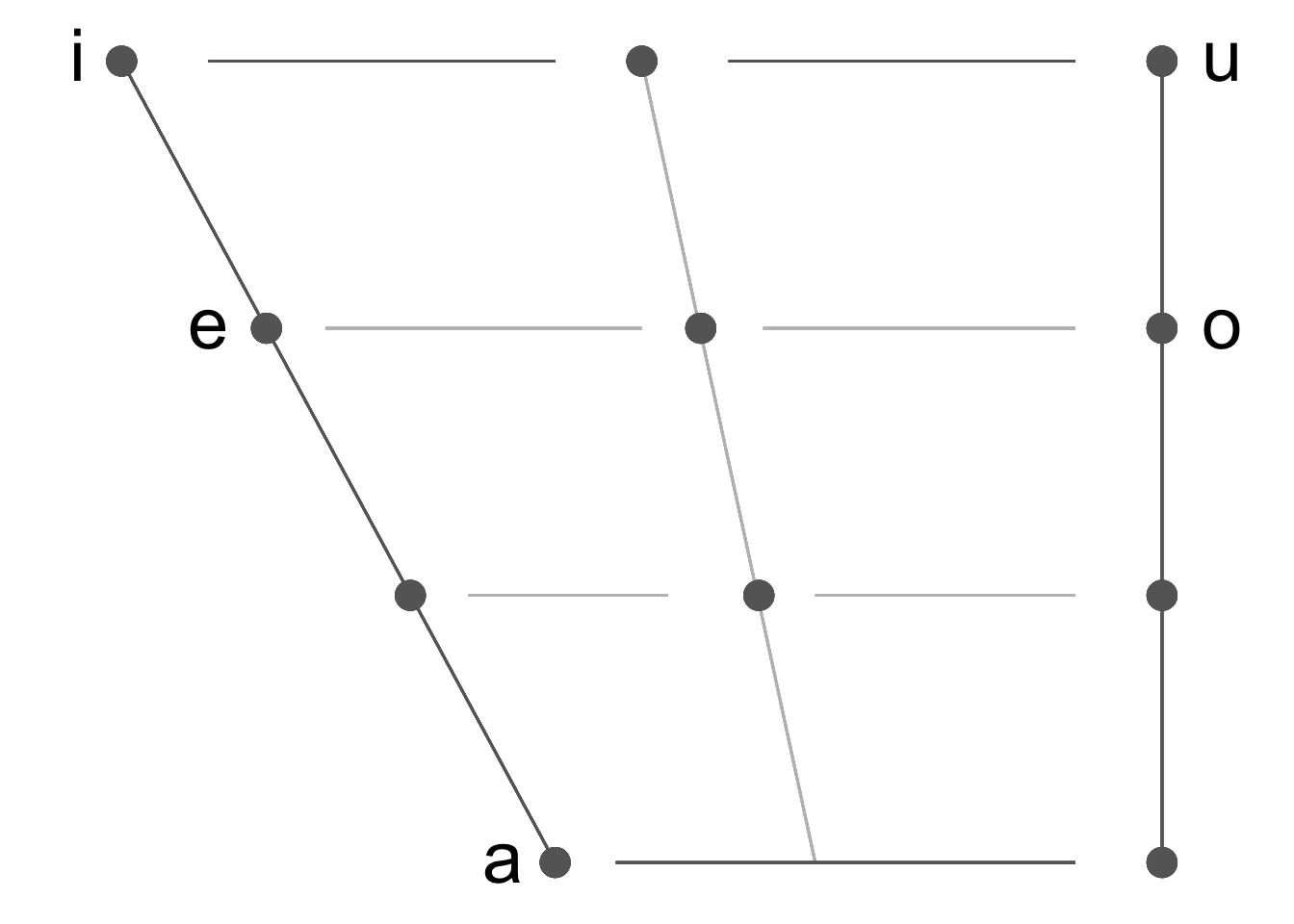

Plotting vowels

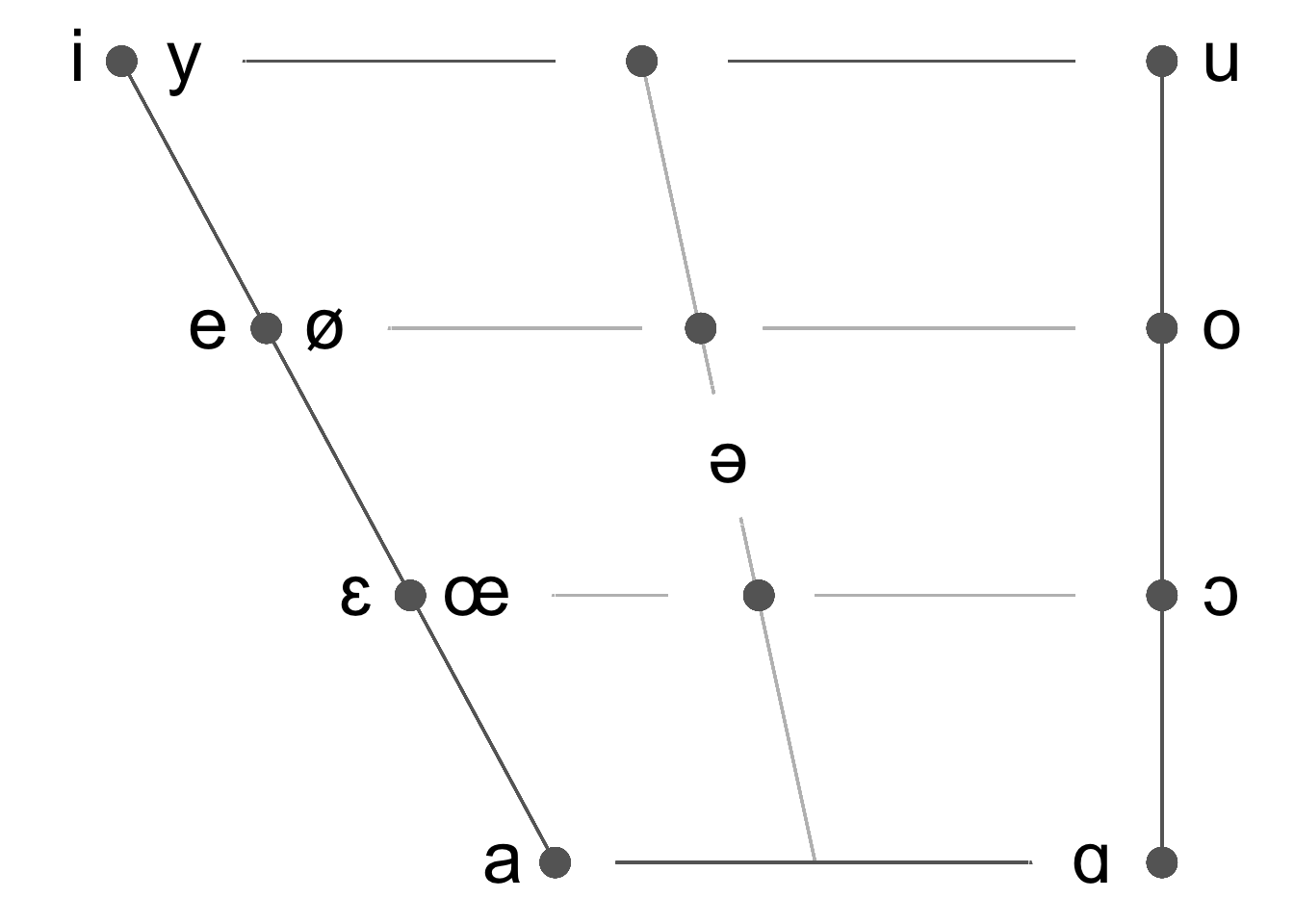

The function plotVowels() creates a vowel trapezoid using ggplot2. If tex = T, the function also saves a tex file with the LaTeX code to create the same trapezoid using the vowel package. Available languages: Arabic, French, English, Dutch, German, Hindi, Italian, Japanese, Korean, Mandarin, Portuguese, Spanish, Swahili, Russian, Talian, Thai, and Vietnamese. Only oral monophthongs are plotted. This function is also implemented as a Shiny App here.

plotVowels(lg = "Spanish", tex = F)

plotVowels(lg = "French", tex = F)

From IPA to TIPA

The function ipa2tipa() takes a phonemically transcribed sequence and returns its tipa equivalent, which can be handy if you use LaTeX.

"Aqui estão algumas palavras" |>

cleanText() |>

ipa(narrow = T) |>

ipa2tipa()

#> Done! Here's your tex code using TIPA:

#> \textipa{ / a."ki es."t\~{a}\~{w} aw."g\~{u}.mas pa."la.vRas / }

Working with ages in acquisition studies

It’s very common to use the format yy;mm for children’s ages in language acquisition studies. To make it easier to work with this format, two functions have been added to the package: monthsAge(), which returns an age in months given a yy;mm age, and meanAge(), which returns the average age of a vector using the same format (in both functions, you can specify the year-month separator). Here are a couple of examples:

monthsAge(age = "02;06")

#> [1] 30

monthsAge(age = "05:03", sep = ":")

#> [1] 63

meanAge(age = c("02;06", "03;04", NA))

#> [1] "2;11"

meanAge(age = c("05:03", "04:07"), sep = ":")

#> [1] "4:11"

Acknowledgements and funding

Parts of this project have benefited from funding from the ENVOL program at Université Laval and from the Social Sciences and Humanities Research Council of Canada (SSHRC). Different undergraduate research assistants at Université Laval have worked on the Spanish and French grapheme-to-phoneme conversion functions: Nicolas C. Bustos, Emmy Dumont, and Linda Wong. Matéo Levesque implemented comprehensive regular expressions for French transcription.

Citing the package

citation("Fonology")

#> To cite Fonology in publications, use:

#>

#> Garcia, Guilherme D. (2026). Fonology: Phonological Analysis in R. R

#> package version 1.0.0 (first published 2023, latest 2026). Available

#> at https://gdgarcia.ca/fonology

#>

#> A BibTeX entry for LaTeX users is

#>

#> @Manual{,

#> title = {Fonology: Phonological Analysis in {R}},

#> author = {Guilherme D. Garcia},

#> note = {R package version 1.0.0 (first published 2023, latest 2026)},

#> year = {2026},

#> url = {https://gdgarcia.ca/fonology},

#> }

Copyright © Guilherme Duarte Garcia

References

Mayer et al. (2024). maxent.ot: An R package for Maximum Entropy constraint grammars. Phonological Data and Analysis, 6(4), 1–44. https://doi.org/10.3765/pda.v6art4.88

Footnotes

¹ Functions without _pt, _fr or _sp are language-independent.